Our customers serve more than 2.8 billion users and include 7 of the 10 largest banks.

Discovery & Inventory

Continuous discovery and inventory of mobile, web, APIs and cloud assets

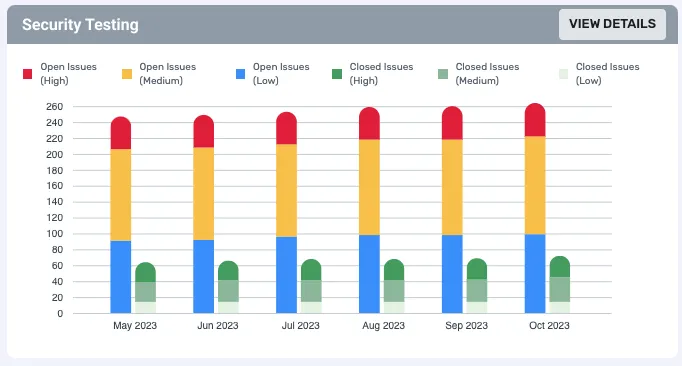

Security Testing

Automated hacking, including SAST, DAST, IAST, and SCA

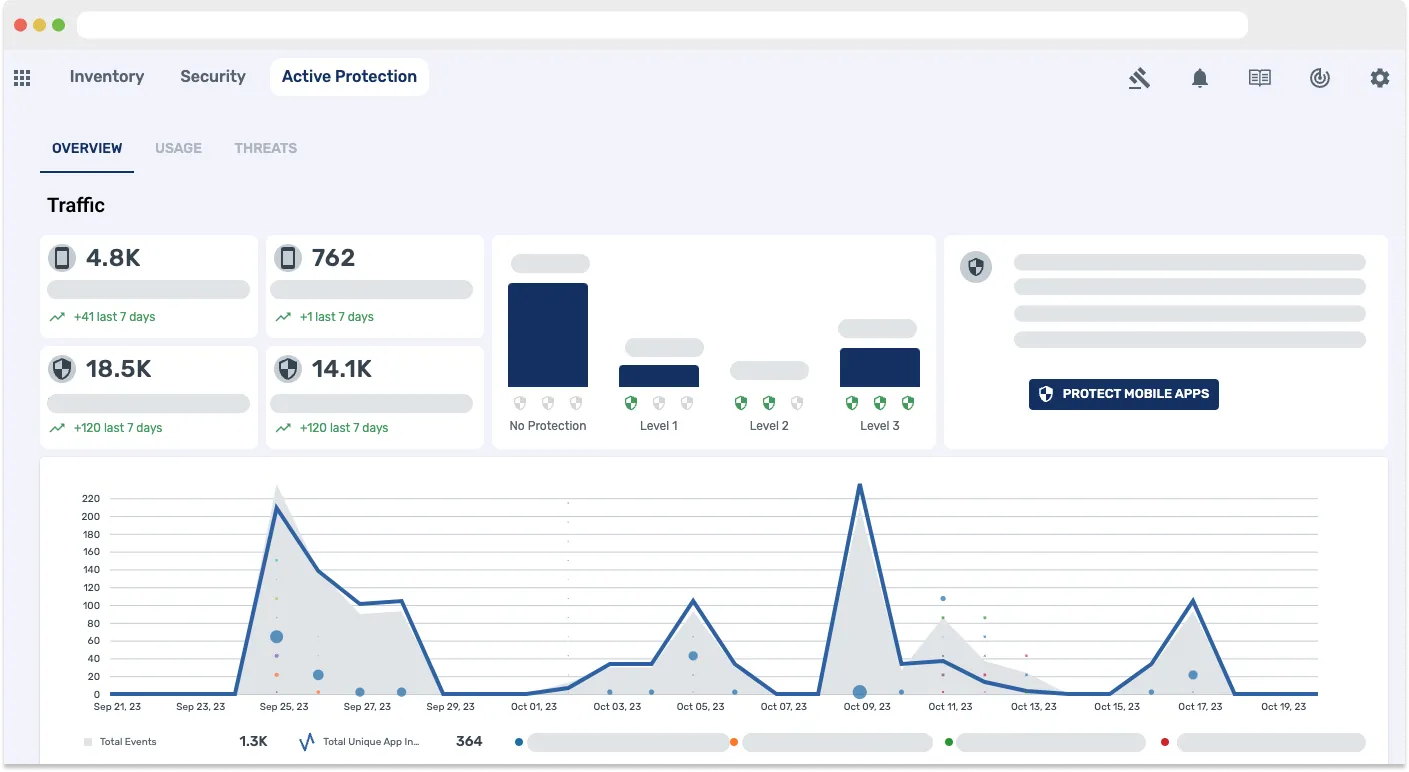

Active Defense

Real-time active protection and observability

What is Data Theorem?

Prevent AppSec Data Breaches

Mobile Secure

iOS & Android: SAST, SCA, DAST, & Runtime

API Secure

Discovery, Security, & Runtime Protection

Web Secure

Test Web 2.0 apps & SPAs

Cloud Secure

Monitor, hack, and protect your cloud-native apps

Code SAST Secure

SAST, SCA and SBOM

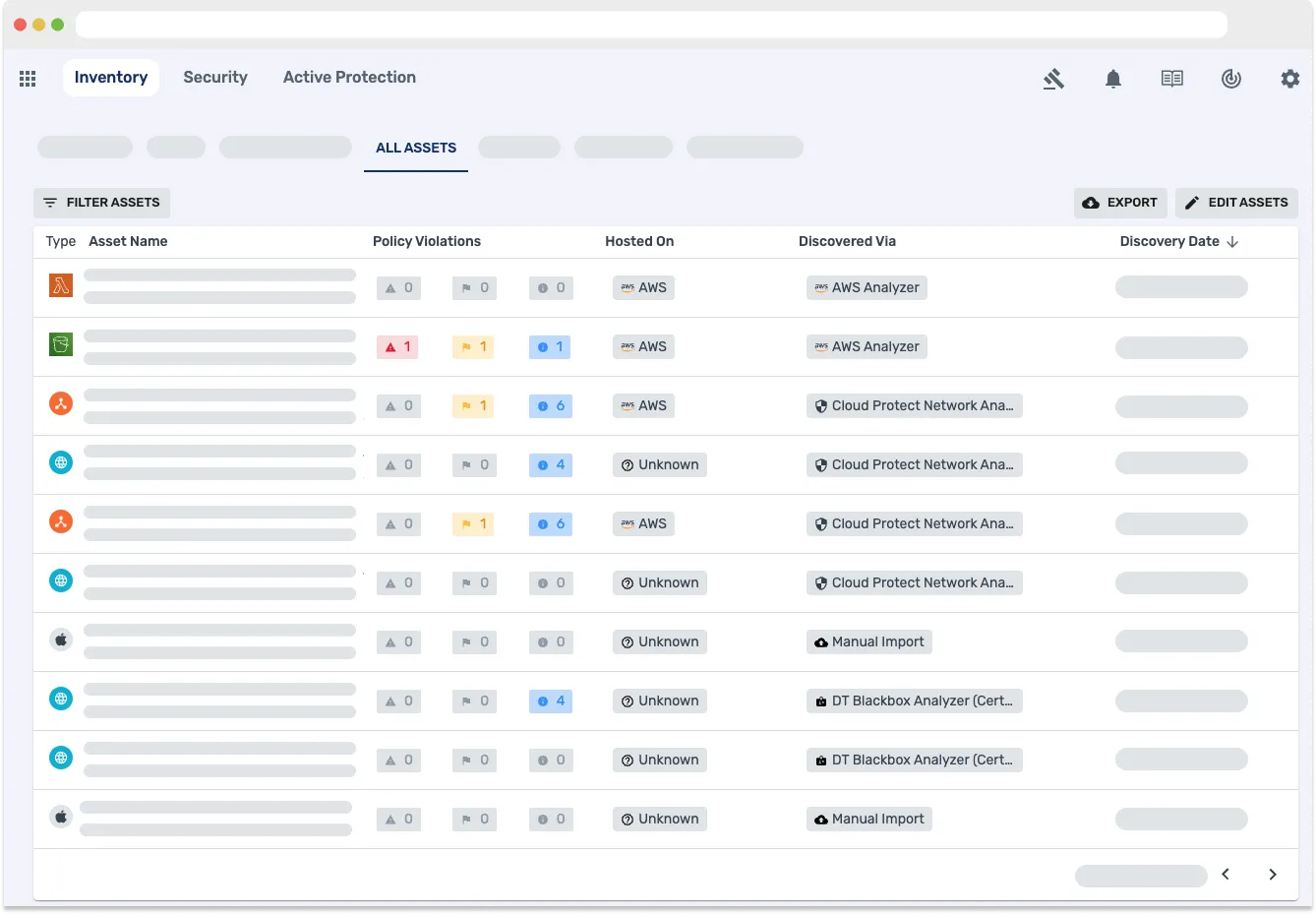

Inventory: Know your entire application attack surface

Continuous discovery and inventory of mobile, web, APIs and cloud assets. Stay updated on app and API changes and their security impacts.

Security Testing: Understand where your apps and APIs are vulnerable to attacks

Data Theorem provides robust AppSec testing via static and dynamic analysis with powerful hacker toolkits that identify threats across each layer of your app stack.

Runtime Protection: Visibility and active blocking of security threats

Data Theorem provides observability and telemetry with active blocking of real-time attacks across your app stack.

Secure your entire development lifecycle

Using Data Theorem’s modern application security platform, our customers have scaled their application security to keep pace with today’s development models. Our customers serve more than 2.8 billion users and include 5 of the 7 largest banks.

Why Data Theorem?

Get Started with Data Theorem Today!
